Explainable AI allows humans to understand why a conclusion was reached and how machines make decisions or specific judgments.

Explainable AI (XAI) is fundamental for people to trust, manage and use AI models. People will not use AI systems whose reasoning they cannot understand.

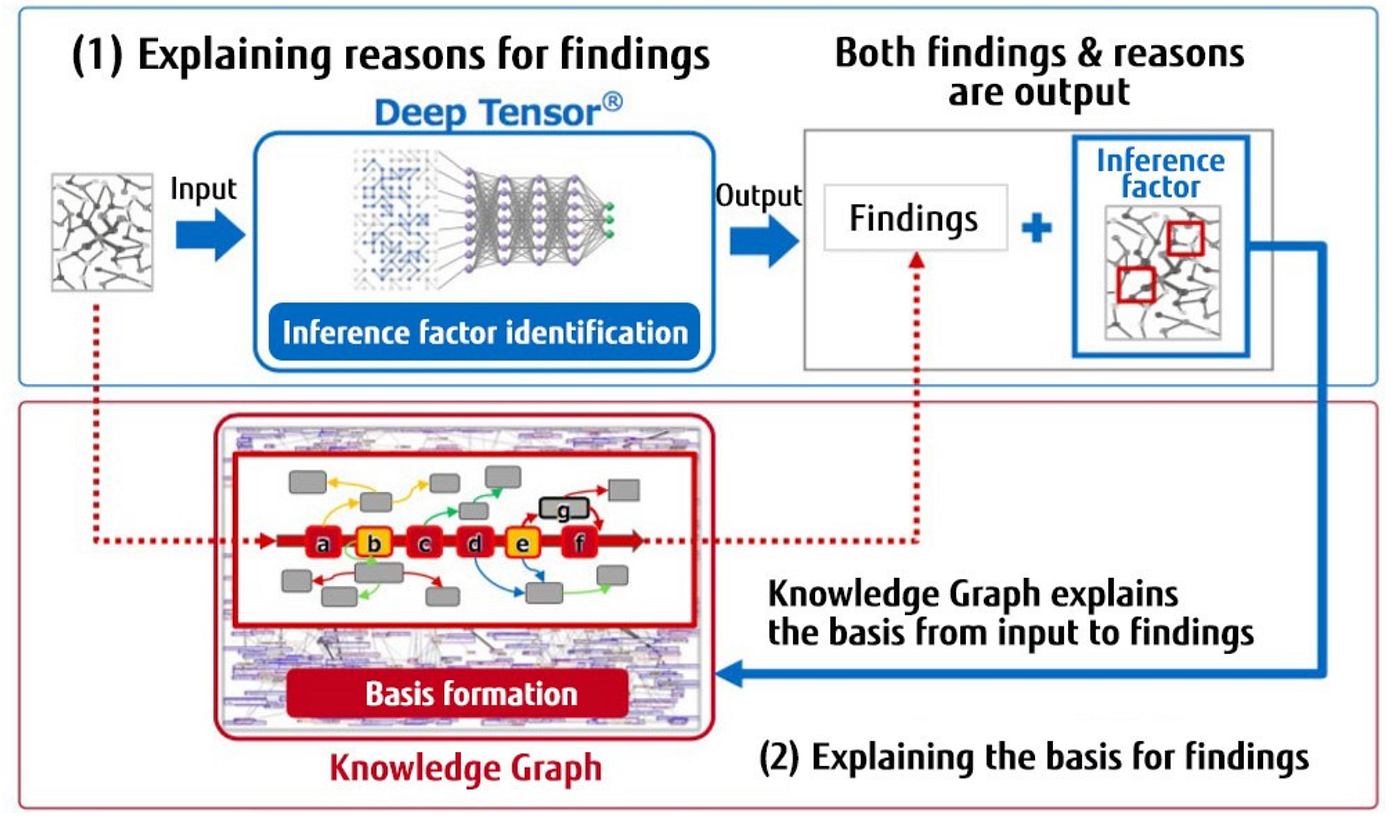

AI is expected to present both its judgments and the reasons for them at the same time.

Our partner Fujitsu Laboratories of America has developed the very first explainable AI, called Deep Tensor. It is capable of showing the reasons behind AI-generated findings and making them explainable, allowing human experts to validate the truth of AI-produced results and gain new insights.

The very first explainable AI, called Deep Tensor

Deep Tensor with Knowledge Graph is already in use in several mission-critical businesses: health management (health changes are detected from employee activity data for workstyle transformation) and investment decisions in finance (corporate growth is predicted based on KPIs).

For example, in genomic medicine, Fujitsu Laboratories of America has built an AI system by training Deep Tensor on 180,000 pieces of disease-related genetic mutation data and associating more than 10 billion pieces of knowledge from 17 million medical articles and other sources in Knowledge Graph. Medical specialists simply review the inference logic flow, which has greatly cut the time from analysis to report submission (from 2 weeks to 1 day).

A portion of the data used in validating the effectiveness of this technology in the field of genomic medicine was an outcome of joint development with Kyoto University in relation to “Construction of Clinical Genome Knowledge Base to Promote Precision Medicine” in the “Program for an Integrated Database of Clinical and Genome Information” under the Japan Agency for Medical Research and Development (AMED).

Fujitsu’s new deep learning technology connects its AI technology, which performs machine learning on graph-structured data, with graph-structured knowledge bases, i.e. knowledge graphs.

Connecting inferences derived by Deep Tensor to Knowledge Graph makes it possible to understand the reasons behind AI-generated findings and to explain them.
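As a rough illustration of the general idea, and not Fujitsu's actual implementation, the sketch below assumes a model has already flagged a few input factors as influential for a prediction. It then searches a small knowledge graph for a chain of relations linking each factor to the predicted outcome. The graph contents, the entity and relation names, and the `find_path` and `explain` helpers are all hypothetical placeholders.

```python
from collections import deque

# Hypothetical toy knowledge graph: entity -> list of (relation, entity).
# Entities and relations are illustrative placeholders, not real medical knowledge.
KNOWLEDGE_GRAPH = {
    "mutation_A": [("located_in", "gene_X")],
    "gene_X": [("regulates", "pathway_P")],
    "pathway_P": [("associated_with", "disease_D")],
    "mutation_B": [("located_in", "gene_Y")],
}


def find_path(graph, start, goal):
    """Breadth-first search from start to goal.

    Returns a list of (subject, relation, object) triples,
    or None if the goal cannot be reached.
    """
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None


def explain(graph, influential_factors, prediction):
    """Map each influential factor to a supporting path in the graph, if any."""
    return {f: find_path(graph, f, prediction) for f in influential_factors}


# Assumed model output: two factors flagged as influential for "disease_D".
explanations = explain(KNOWLEDGE_GRAPH, ["mutation_A", "mutation_B"], "disease_D")
for factor, path in explanations.items():
    if path is None:
        print(f"{factor}: no supporting path found")
    else:
        chain = "; ".join(f"{s} [{r}] {o}" for s, r, o in path)
        print(f"{factor}: {chain}")
```

In this toy setup, a factor with a path to the outcome comes with a chain of facts a human expert can review, while a factor with no path is reported as unsupported rather than silently accepted.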